Users expect applications to load quickly and respond without noticeable delay. Caching is one of the key strategies developers use to meet that expectation: it makes applications faster and more responsive while reducing server load and improving scalability. But what exactly is caching, and how does it improve application performance?

What Is Caching?

Caching is the process of storing copies of data or computation results in a temporary storage location, known as a cache, so they can be quickly retrieved when needed again. Instead of reaching out to the main data source (like a database or an API) each time information is required, applications can pull frequently accessed data directly from the cache, reducing the time and resources needed to fetch it.

For example, a weather app doesn’t need to request the forecast for your location from a remote server every few seconds. It can cache the data for a short period and serve it instantly on subsequent requests, fetching a fresh copy only once the cached one expires.

How Caching Works

Caching typically involves a few main components:

Cache Location: A cache can live in several places:

- Client-side (such as within a user’s browser or device)

- Server-side (in the application server’s memory or on a dedicated cache server)

- CDN (Content Delivery Network) cache for geographically distributed data

Cache Expiration: Cached data is usually set to expire after a fixed time-to-live (TTL) or when specific conditions are met, such as the underlying data changing. This limits how stale the cached data can become.
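To make time-based expiration concrete, here is a minimal in-memory cache in Python where every entry carries a TTL. The `TTLCache` class, its method names, and the 10-minute TTL in the usage lines are hypothetical choices for this sketch, not the API of any particular caching library.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Minimal in-memory cache where every entry expires ttl_seconds after it is stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self._clock = clock  # injectable so expiry can be demonstrated without sleeping
        self._store: Dict[Hashable, Tuple[Any, float]] = {}

    def set(self, key: Hashable, value: Any) -> None:
        # Store the value together with its absolute expiry time.
        self._store[key] = (value, self._clock() + self.ttl_seconds)

    def get(self, key: Hashable) -> Optional[Any]:
        # Returns None for both "never cached" and "expired" (a simplification).
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: drop it so the caller refetches fresh data
            return None
        return value


# Usage with a fake clock standing in for real time.
fake_now = [0.0]
forecasts = TTLCache(ttl_seconds=600, clock=lambda: fake_now[0])
forecasts.set("forecast:berlin", {"temp_c": 18})
print(forecasts.get("forecast:berlin"))  # {'temp_c': 18}
fake_now[0] = 601.0  # ten minutes and one second later
print(forecasts.get("forecast:berlin"))  # None
```

Injecting the clock is a design choice that keeps the example deterministic; in production code the default `time.monotonic` is used so that wall-clock adjustments don’t extend or cut short an entry’s lifetime.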

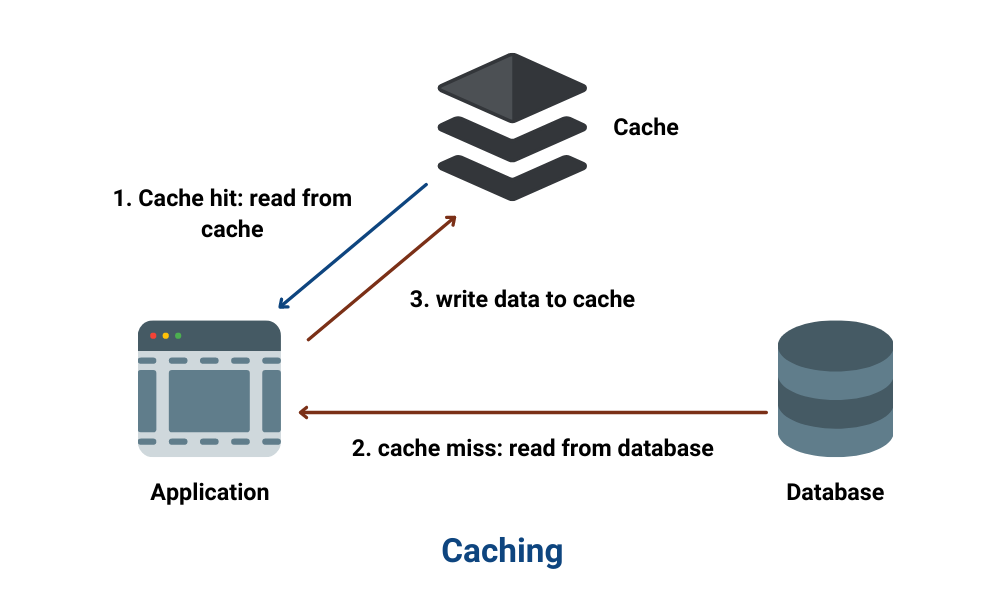

Cache Hits and Misses:

- Cache Hit: When the requested data is found in the cache, it’s served directly from there, which is much faster than going to the original source.

- Cache Miss: When the data is not in the cache, the application fetches it from the original source, potentially causing a delay. The retrieved data is then often added to the cache for future requests.
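The hit/miss flow above is often called the cache-aside (or lazy-loading) pattern. A minimal Python sketch follows; the `CacheAside` class and the `load_user` function are hypothetical, with `load_user` standing in for a slow database or API lookup.

```python
from typing import Any, Callable, Dict, Hashable


class CacheAside:
    """Check the cache first; on a miss, load from the source and store the result."""

    def __init__(self, load_from_source: Callable[[Hashable], Any]) -> None:
        self._load = load_from_source
        self._cache: Dict[Hashable, Any] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._cache:
            self.hits += 1  # cache hit: no trip to the original source
            return self._cache[key]
        self.misses += 1  # cache miss: fetch from the original source
        value = self._load(key)
        self._cache[key] = value  # populate the cache so the next request is a hit
        return value


source_calls = []


def load_user(user_id: int) -> dict:
    """Hypothetical stand-in for a slow database or API lookup."""
    source_calls.append(user_id)
    return {"id": user_id}


users = CacheAside(load_user)
users.get(42)  # miss: calls load_user
users.get(42)  # hit: served from the cache
print(users.hits, users.misses, len(source_calls))  # 1 1 1
```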

Types of Caching

There are several types of caching commonly used in application development, each serving a unique purpose:

Browser Caching: Web browsers store local copies of website assets (such as images, CSS, and JavaScript files) to speed up loading times on subsequent visits. By reusing resources stored on a user’s device, browser caching can significantly reduce page load times.
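A browser decides whether it can reuse a stored asset mainly from the `Cache-Control` header the server sent with it. The Python sketch below is a heavily simplified version of that freshness check (a stored response is treated as fresh while its age is below `max-age`); it deliberately ignores the `Age` and `Expires` headers, heuristic freshness, and revalidation, and the function names are hypothetical.

```python
from typing import Dict, Optional


def parse_cache_control(header: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: value or None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def is_fresh(cache_control: str, stored_at: float, now: float) -> bool:
    """Simplified check: can a stored response be reused without contacting the server?"""
    directives = parse_cache_control(cache_control)
    # no-store: don't keep a copy at all; no-cache: revalidate before every reuse.
    if "no-store" in directives or "no-cache" in directives:
        return False
    max_age = directives.get("max-age")
    if max_age is None or not max_age.isdigit():
        return False  # no explicit lifetime; real browsers may apply heuristics instead
    age = now - stored_at  # ignores the Age header and clock skew
    return age < int(max_age)


print(is_fresh("public, max-age=31536000", stored_at=0, now=3600))  # True
print(is_fresh("no-cache", stored_at=0, now=1))  # False
```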

Database Caching: In applications with high database query demands, caching commonly requested data can reduce database load. Tools like Redis and Memcached provide in-memory storage for fast data retrieval, allowing applications to serve information almost instantly rather than querying a slower database.
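As a self-contained illustration of caching query results, the sketch below uses Python’s built-in `sqlite3` module as the “database” and a plain dictionary in place of Redis or Memcached. The `QueryCache` class is hypothetical; a real deployment would use a shared cache server with expiration rather than a per-process dictionary.

```python
import sqlite3
from typing import Any, Dict, List, Tuple


class QueryCache:
    """Caches query results keyed by (SQL text, parameters)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self._results: Dict[Tuple[str, Tuple[Any, ...]], List[tuple]] = {}
        self.db_round_trips = 0

    def fetchall(self, sql: str, params: Tuple[Any, ...] = ()) -> List[tuple]:
        key = (sql, params)
        if key not in self._results:
            self.db_round_trips += 1  # only cache misses reach the database
            self._results[key] = self.conn.execute(sql, params).fetchall()
        return list(self._results[key])  # return a copy so callers can't mutate the cache

    def clear(self) -> None:
        # Call after writes; this sketch has no finer-grained invalidation.
        self._results.clear()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO products (name) VALUES (?)", [("Keyboard",), ("Mouse",)])

cached = QueryCache(conn)
sql = "SELECT name FROM products ORDER BY id"
print(cached.fetchall(sql))  # [('Keyboard',), ('Mouse',)]
cached.fetchall(sql)
print(cached.db_round_trips)  # 1
```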

Content Delivery Network (CDN) Caching: CDNs cache content (like images, videos, and files) in multiple geographic locations. When a user requests a piece of content, it’s delivered from the nearest CDN server, reducing latency and speeding up load times.

Application/Memory Caching: Some applications use in-memory caching within the app’s server, storing temporary data locally. This allows applications to handle requests without constantly querying external databases or services.
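In Python, the standard library’s `functools.lru_cache` decorator is one ready-made way to cache results in the application’s own memory. The decorated `fetch_user_profile` function below is hypothetical, with `time.sleep` standing in for a slow call.

```python
import time
from functools import lru_cache


@lru_cache(maxsize=1024)
def fetch_user_profile(user_id: int) -> tuple:
    """Hypothetical slow lookup; results are memoized in this process's memory."""
    time.sleep(0.05)  # stand-in for a slow database or service call
    return (user_id, f"user-{user_id}")


fetch_user_profile(7)  # miss: runs the function body
fetch_user_profile(7)  # hit: returned from the in-process cache
print(fetch_user_profile.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

Keep in mind that this cache is private to one process and has no expiration: each server worker holds its own copy, and entries stay until they are evicted by the size limit or cleared with `fetch_user_profile.cache_clear()`.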

Benefits of Caching for Application Performance

Reduced Latency and Faster Load Times: By storing data closer to the end user or the application itself, caching cuts down on the time needed to retrieve data from distant or slower storage locations. This results in faster response times and a smoother user experience.

Decreased Server Load: When data is cached, fewer requests hit the main server or database. This can dramatically reduce the load on these systems, helping to avoid bottlenecks during high-traffic periods.
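A rough calculation shows the effect. If a fraction h of requests are served from the cache (the hit ratio), only the misses reach the backend. The numbers here are illustrative, not measurements.

```latex
R_{\text{backend}} = (1 - h)\,R_{\text{total}}
\qquad\text{e.g. } h = 0.9,\; R_{\text{total}} = 1000\ \text{req/s}
\;\Rightarrow\; R_{\text{backend}} = 0.1 \times 1000 = 100\ \text{req/s}
```

Raising the hit ratio from 90% to 95% halves the backend traffic again, to 50 req/s.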

Cost Savings: Caching reduces the need for frequent database queries or API calls, saving on operational costs, especially for large-scale applications. Additionally, serving content from CDN caches reduces the bandwidth your origin servers must provide, further lowering costs.

Improved Scalability: Applications that rely on caching can handle higher traffic loads more gracefully, as fewer resources are needed for repeated requests. This is especially beneficial for websites and apps that experience sudden traffic spikes.

Challenges and Considerations

While caching can significantly boost performance, it’s essential to implement it thoughtfully. Here are a few potential pitfalls:

Stale Data: If cached data is not refreshed in a timely manner, users may see outdated information. Implementing proper expiration times and invalidation techniques helps to keep cache data accurate.

Cache Invalidation: Determining when to clear or update cache data can be complex, especially in applications with frequently changing data. Incorrect invalidation can lead to errors and inconsistent user experiences.
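One common invalidation approach is to delete the cached entry whenever the underlying data is written, so the next read repopulates it from the source of truth. The single-threaded Python sketch below is hypothetical: `CachedStore` uses a dictionary as a stand-in database, and it ignores the race conditions that concurrent readers and writers introduce in real systems.

```python
from typing import Any, Dict, Optional


class CachedStore:
    """Reads go through a cache; writes update the source and invalidate the cache."""

    def __init__(self) -> None:
        self._db: Dict[str, Any] = {}     # hypothetical stand-in for the real database
        self._cache: Dict[str, Any] = {}

    def get(self, key: str) -> Optional[Any]:
        if key in self._cache:
            return self._cache[key]
        value = self._db.get(key)
        if value is not None:
            self._cache[key] = value  # cache only values that actually exist
        return value

    def update(self, key: str, value: Any) -> None:
        self._db[key] = value       # 1. write to the source of truth first
        self._cache.pop(key, None)  # 2. then invalidate the now-outdated cached copy


store = CachedStore()
store.update("price:42", 19.99)
print(store.get("price:42"))  # 19.99
store.update("price:42", 17.49)
print(store.get("price:42"))  # 17.49 (not the stale 19.99)
```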

Cache Overhead: Caching consumes memory and storage. Mismanagement can lead to excessive resource use and degraded performance, especially if cache sizes grow too large.
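A standard way to keep cache size under control is to cap the number of entries and evict the least recently used (LRU) item when the cap is exceeded. Here is a minimal Python sketch built on `collections.OrderedDict`; the `LRUCache` class and its eviction counter are illustrative, not a specific library’s API.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.evictions = 0

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
            self.evictions += 1


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")     # "a" becomes the most recently used entry
lru.put("c", 3)  # over capacity: evicts "b"
print(lru.get("b"), lru.get("a"), lru.evictions)  # None 1 1
```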

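Cache Miss Penalty: A cache miss can take longer than fetching the data directly, because the application pays for the cache lookup (and often for writing the result back) on top of the trip to the original source. If the hit ratio is low, this overhead can outweigh the gains, so cache only data that is actually requested repeatedly.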
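A simple model makes this trade-off concrete. Assume a cache lookup takes t_c, a trip to the original source takes t_o, and a fraction h of requests are hits; the cost of writing results back into the cache is ignored here. Every request pays for the lookup, and misses additionally pay for the source:

```latex
T_{\text{eff}} = h\,t_c + (1 - h)\,(t_c + t_o) = t_c + (1 - h)\,t_o
\qquad
t_c + (1 - h)\,t_o < t_o \iff h > \frac{t_c}{t_o}
```

With illustrative values of t_c = 1 ms and t_o = 20 ms, each miss costs 21 ms instead of 20 ms, caching breaks even at h = 0.05, and at h = 0.9 the average drops to 1 + 0.1 × 20 = 3 ms.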

Best Practices for Effective Caching

Define Cache Expiration Policies: Set expiration times according to the type of data. For example, cache static assets (like images) for a longer period, while dynamically generated content should have shorter expiration times.
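For HTTP responses, one way to express such a policy is to choose a `Cache-Control` header per type of asset. The Python sketch below maps file extensions to a `max-age`; the table, the specific durations, and the `cache_control_for` function are hypothetical examples to adapt, not recommended values.

```python
from pathlib import PurePosixPath

# Hypothetical policy table: max-age in seconds per file extension.
MAX_AGE_BY_EXTENSION = {
    ".png": 30 * 24 * 3600,  # images: 30 days
    ".jpg": 30 * 24 * 3600,
    ".css": 7 * 24 * 3600,   # stylesheets and scripts: 7 days
    ".js": 7 * 24 * 3600,
    ".html": 300,            # HTML pages: 5 minutes
}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for the requested path."""
    ext = PurePosixPath(path).suffix.lower()
    max_age = MAX_AGE_BY_EXTENSION.get(ext)
    if max_age is None:
        return "no-cache"  # dynamic or unknown content: revalidate on every use
    return f"public, max-age={max_age}"


print(cache_control_for("/static/logo.png"))  # public, max-age=2592000
print(cache_control_for("/api/cart"))  # no-cache
```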

Use Appropriate Cache Levels: Consider which level (browser, server, or CDN) makes the most sense for caching different kinds of data.

Monitor and Adjust Cache Usage: Regularly track hit ratios, miss rates, and eviction rates, and use these insights to fine-tune your caching strategy.
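As a sketch of what that monitoring might compute, the Python snippet below derives a hit ratio and an eviction rate from raw counters and flags values outside target thresholds. The `CacheStats` class, the `health_warnings` function, and the 80% / 5% thresholds are all hypothetical; suitable targets depend entirely on the workload.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CacheStats:
    hits: int = 0
    misses: int = 0
    evictions: int = 0

    @property
    def requests(self) -> int:
        return self.hits + self.misses

    @property
    def hit_ratio(self) -> float:
        return self.hits / self.requests if self.requests else 0.0

    @property
    def eviction_rate(self) -> float:
        # Evictions per request; a high value suggests the cache is too small.
        return self.evictions / self.requests if self.requests else 0.0


def health_warnings(stats: CacheStats, min_hit_ratio: float = 0.8,
                    max_eviction_rate: float = 0.05) -> List[str]:
    """Flag metrics that fall outside (hypothetical) target thresholds."""
    warnings = []
    if stats.hit_ratio < min_hit_ratio:
        warnings.append(f"hit ratio {stats.hit_ratio:.0%} is below target {min_hit_ratio:.0%}")
    if stats.eviction_rate > max_eviction_rate:
        warnings.append(f"eviction rate {stats.eviction_rate:.0%} exceeds {max_eviction_rate:.0%}")
    return warnings


print(health_warnings(CacheStats(hits=700, misses=300, evictions=120)))
```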

Consider Cache Partitioning: For large data sets, partition (shard) the cache across multiple nodes so that no single cache instance has to hold all of the data in memory.
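A simple way to decide which partition owns a key is to hash the key and take the result modulo the number of partitions. In the hypothetical Python sketch below, each partition is just a dictionary; in a real system each would be a separate cache node.

```python
import hashlib
from typing import Any, Dict, List, Optional


class PartitionedCache:
    """Spreads keys across partitions by hashing; each partition stands in for a cache node."""

    def __init__(self, num_partitions: int) -> None:
        self._partitions: List[Dict[str, Any]] = [dict() for _ in range(num_partitions)]

    def _partition_for(self, key: str) -> int:
        # Stable hash: Python's built-in hash() for str is randomized per process.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self._partitions)

    def set(self, key: str, value: Any) -> None:
        self._partitions[self._partition_for(key)][key] = value

    def get(self, key: str) -> Optional[Any]:
        return self._partitions[self._partition_for(key)].get(key)

    def sizes(self) -> List[int]:
        return [len(p) for p in self._partitions]


cache = PartitionedCache(num_partitions=4)
for i in range(1000):
    cache.set(f"user:{i}", i)
print(cache.sizes())  # roughly 250 keys per partition
```

One caveat of plain modulo hashing: changing the number of partitions remaps most keys, which empties most of the cache at once. Consistent hashing is the usual technique for limiting that reshuffling.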

Conclusion

Caching is an indispensable tool for modern applications, enabling faster performance, reduced latency, and improved scalability. By storing frequently accessed data closer to the end user, caching not only boosts speed but also minimizes server load, making it an essential strategy for optimizing application performance. Properly managed, caching can be the key to delivering responsive and cost-effective applications, providing users with the experience they expect.