Introduction to VGG Architecture

In the ever-evolving field of deep learning, convolutional neural networks (CNNs) have proven indispensable for tasks like image recognition, object detection, and video analysis. Among the many groundbreaking architectures, the VGG network, introduced by the Visual Geometry Group at the University of Oxford in 2014, stands out as a milestone. VGG demonstrated that depth and simplicity in network design could achieve remarkable performance.

This article dives into the architecture, its unique contributions, and its transformative impact on deep learning.

Origins of VGG

The VGG network was proposed in the seminal paper “Very Deep Convolutional Networks for Large-Scale Image Recognition” by Karen Simonyan and Andrew Zisserman. The paper set out to investigate how network depth affects accuracy in large-scale image recognition. Its approach was to keep the rest of the design fixed, use very small (3×3) convolutional filters, and steadily increase depth to 16–19 weight layers so the network could extract intricate features from data.

VGG gained fame at the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2014, where the team placed first in the localization task and second in the classification task.

Key Features of VGG Architecture

1. Simplicity in Design

VGG employs a straightforward approach, using 3×3 convolutional filters throughout the network. Unlike earlier architectures such as AlexNet, which mixed 11×11, 5×5, and 3×3 filters, VGG standardized this choice, making the network easier to analyze and implement.
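The VGG paper's argument for small filters can be checked with a little arithmetic: a stack of two stride-1 3×3 convolutions covers the same 5×5 input region as a single 5×5 filter, and a stack of three covers 7×7, while using fewer weights and inserting a ReLU between layers. The sketch below is illustrative only; it assumes every layer has the same number of input and output channels `C`, ignores biases, and `C = 256` is an arbitrary value chosen for the example.

```python
def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of num_layers stacked stride-1 convolutions with a square kernel."""
    # Each extra stride-1 layer widens the receptive field by (kernel - 1) pixels.
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel: int, channels: int) -> int:
    """Weights in one conv layer mapping `channels` -> `channels` (biases ignored)."""
    return kernel * kernel * channels * channels


C = 256  # hypothetical channel width, for illustration only

for n in (2, 3):
    rf = stacked_receptive_field(n)
    stacked = n * conv_weights(3, C)   # n layers of 3x3 filters
    single = conv_weights(rf, C)       # one layer with the same receptive field
    print(f"{n} x 3x3 layers: receptive field {rf}x{rf}, "
          f"{stacked:,} weights vs {single:,} for one {rf}x{rf} layer")
```

In general, three 3×3 layers need 27C² weights where a single 7×7 layer needs 49C².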

2. Increased Network Depth

VGG’s hallmark is its depth. The architecture comes in several configurations, commonly known as VGG-11, VGG-13, VGG-16, and VGG-19, where the number counts the weight layers (convolutional plus fully connected); pooling and softmax layers are not included in the count. This depth allows the network to capture intricate patterns and hierarchies in data.
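The naming convention can be made concrete by writing each configuration as a list, where an integer is the output-channel count of a 3×3 convolution and `"M"` marks a max-pooling layer. This list notation and the `weight_layers` helper are illustrative assumptions; the layer sequences follow configurations A, B, D, and E from the VGG paper, and counting the convolutions plus the three fully connected layers recovers each name.

```python
# Integers: output channels of a 3x3 conv layer. "M": 2x2 max pooling (no weights).
VGG_CONFIGS = {
    "VGG-11": [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "VGG-13": [64, 64, "M", 128, 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "VGG-16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
               512, 512, 512, "M", 512, 512, 512, "M"],
    "VGG-19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
               512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}
NUM_FC_LAYERS = 3  # every configuration ends with three fully connected layers


def weight_layers(config):
    """Convolutional layers plus fully connected layers; pooling is not counted."""
    conv_layers = sum(1 for item in config if item != "M")
    return conv_layers + NUM_FC_LAYERS


for name, cfg in VGG_CONFIGS.items():
    print(name, "->", weight_layers(cfg), "weight layers,", cfg.count("M"), "pooling layers")
```

All four variants share the same five-block layout; they differ only in how many convolutions each block contains.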

3. Uniform Convolution and Pooling

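Every convolutional layer in VGG is followed by a ReLU activation, and each block of convolutions ends with a 2×2 max-pooling layer that halves the spatial resolution of the feature maps, while the number of channels grows from 64 in the first block to 512 in the last two. This regular pattern makes the network easy to describe, reason about, and implement.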
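The conv, ReLU, pool rhythm can be illustrated on a toy single-channel feature map in plain Python. The 4×4 input values are made up, and `relu` and `max_pool_2x2` are hypothetical helpers rather than a framework API; real implementations apply the same operations to every channel of a tensor.

```python
def relu(feature_map):
    """Element-wise ReLU: negative activations become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; halves height and width (even sizes assumed)."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [max(feature_map[i][j], feature_map[i][j + 1],
             feature_map[i + 1][j], feature_map[i + 1][j + 1])
         for j in range(0, w, 2)]
        for i in range(0, h, 2)
    ]


# Hypothetical 4x4 output of one convolution filter.
conv_out = [
    [ 1.0, -2.0,  3.0,  0.5],
    [-1.0,  4.0, -0.5,  2.0],
    [ 0.0, -3.0,  1.5, -1.0],
    [ 2.5,  1.0, -2.0,  0.0],
]

pooled = max_pool_2x2(relu(conv_out))
print(pooled)  # [[4.0, 3.0], [2.5, 1.5]]
```

Each 2×2 window keeps only its strongest response, so the 4×4 map shrinks to 2×2, mirroring how each VGG block halves height and width.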

4. Dense Fully Connected Layers

After the last pooling layer, VGG uses three fully connected layers to aggregate the extracted features. These are followed by a softmax layer that turns the final scores into class probabilities.
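The softmax step converts the scores from the last fully connected layer (1000 of them for ImageNet) into probabilities that sum to 1. A minimal, numerically stable version is sketched below on three hypothetical scores; subtracting the largest score before exponentiating avoids overflow without changing the result.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)                          # shift so the largest exponent is exp(0)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for 3 classes (VGG's final layer would produce 1000).
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
```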

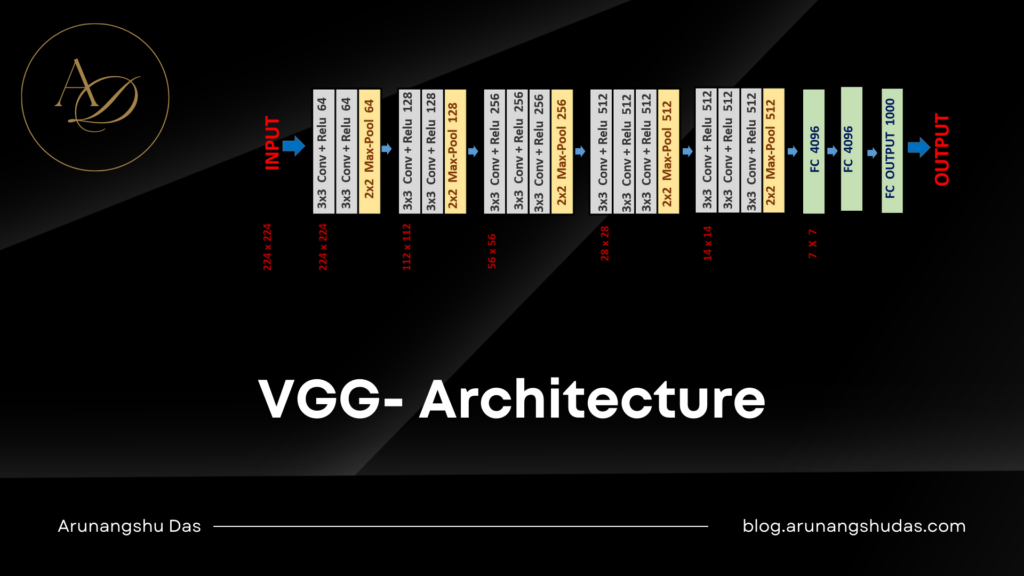

Detailed Architecture of VGG

Layer-by-Layer Breakdown

- Input Layer: The input is typically an RGB image of dimensions 224×224×3.

- Convolutional Layers: Each block stacks one to four convolutional layers (depending on the configuration) with 3×3 filters, stride 1, and 1-pixel padding, so the spatial dimensions are preserved. Five such blocks are stacked in sequence.

- Max Pooling: After each block, a 2×2 max pooling layer with stride 2 halves the height and width of the feature maps (224 → 112 → 56 → 28 → 14 → 7 over the five blocks).

- Fully Connected Layers: The flattened feature maps (7×7×512 = 25,088 values for a 224×224 input) pass through three dense layers with 4096, 4096, and 1000 neurons respectively; the last matches the number of classes in ImageNet.
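Tracing the spatial size through VGG-16 shows where the inputs to the first dense layer come from. The sketch applies the standard output-size formula floor((n + 2p - k) / s) + 1: with kernel k = 3, stride s = 1, and padding p = 1 a convolution leaves n unchanged, and with k = 2, s = 2, p = 0 pooling halves it. The list notation (channel counts, with `"M"` for pooling) and the helper names are illustrative assumptions.

```python
def conv_out_size(n, kernel, stride, padding):
    """Standard output-size formula for convolution and pooling layers."""
    return (n + 2 * padding - kernel) // stride + 1


# VGG-16 feature extractor: integers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]


def trace_shapes(config, size=224, channels=3):
    """Return the (height, width, channels) shape after every pooling layer."""
    shapes = []
    for item in config:
        if item == "M":
            size = conv_out_size(size, kernel=2, stride=2, padding=0)  # halves the size
            shapes.append((size, size, channels))
        else:
            size = conv_out_size(size, kernel=3, stride=1, padding=1)  # size unchanged
            channels = item
    return shapes


stages = trace_shapes(VGG16)
for h, w, c in stages:
    print(f"{h}x{w}x{c}")
h, w, c = stages[-1]
print("flattened:", h * w * c)  # flattened: 25088
```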

Example: VGG-16

For VGG-16, whose 16 weight layers are its 13 convolutional and 3 fully connected layers, the architecture consists of:

- 13 convolutional layers

- 5 max-pooling layers

- 3 fully connected layers

- 1 softmax layer

Advantages of VGG Architecture

1. Modular Design

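VGG is built from one repeated block (a few 3×3 convolutions followed by max pooling). This standardization simplifies the design process: blocks can be added or removed to make the network deeper or shallower, and the design adapts easily to different tasks.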

2. High Performance

Despite its simplicity, VGG achieved results competitive with the best image recognition models of 2014, showing that a plain but deep network can excel when carefully designed.

3. Transfer Learning Potential

VGG’s pre-trained weights on ImageNet have become a staple for transfer learning, enabling rapid development in applications like medical imaging and autonomous driving.

Challenges with VGG

1. Computational Intensity

The depth and number of parameters (around 138 million for VGG-16) make VGG computationally expensive: training demands substantial GPU resources, and inference is slower than with later, more efficient architectures.
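The 138 million figure can be reproduced by counting weights and biases layer by layer. The sketch assumes a 224×224×3 input and 1000 output classes, as in the ImageNet setup; `count_parameters` and the list notation for the layer configuration are hypothetical, chosen for illustration.

```python
# VGG-16 feature extractor: integers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
FC_SIZES = (4096, 4096, 1000)  # two hidden dense layers, then 1000 ImageNet classes


def count_parameters(config, in_channels=3, size=224, fc_sizes=FC_SIZES):
    """Count weights + biases in the conv and fully connected parts of a VGG config."""
    conv_params, channels = 0, in_channels
    for item in config:
        if item == "M":
            size //= 2  # 2x2 max pooling, stride 2; has no parameters
        else:
            conv_params += 3 * 3 * channels * item + item  # 3x3 kernels + one bias per filter
            channels = item
    fc_params, n_in = 0, channels * size * size  # flattened feature map feeds the first FC layer
    for n_out in fc_sizes:
        fc_params += n_in * n_out + n_out
        n_in = n_out
    return conv_params, fc_params


conv, fc = count_parameters(VGG16)
print(f"conv: {conv:,}  fc: {fc:,}  total: {conv + fc:,}")
# conv: 14,714,688  fc: 123,642,856  total: 138,357,544
```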

2. Memory Usage

Most of VGG's parameters sit in its fully connected layers, and storing them requires significant memory. This makes VGG less suitable for deployment on resource-constrained devices.
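A quick calculation shows how lopsided the parameter budget is and what it implies for memory. It assumes the standard 224×224 input, for which the last pooling layer outputs a 7×7×512 map; the total of 138,357,544 is the VGG-16 count including biases. Only the stored weights are counted here; activations during a forward pass need additional memory.

```python
# VGG-16 fully connected layers (weights + biases), assuming a 7x7x512 input to fc6.
fc6 = 7 * 7 * 512 * 4096 + 4096   # 102,764,544
fc7 = 4096 * 4096 + 4096          # 16,781,312
fc8 = 4096 * 1000 + 1000          # 4,097,000
TOTAL = 138_357_544               # all 16 weight layers of VGG-16

print(f"first FC layer share: {fc6 / TOTAL:.1%}")               # 74.3%
print(f"all three FC layers: {(fc6 + fc7 + fc8) / TOTAL:.1%}")  # 89.4%

# Memory needed just to store the weights at common precisions.
for dtype, nbytes in (("float32", 4), ("float16", 2)):
    print(f"{dtype}: {TOTAL * nbytes / 2**20:.0f} MiB")         # 528 MiB, 264 MiB
```

Because the first dense layer dominates, replacing it (for example with global average pooling, as many later architectures do) removes most of the weights while leaving the convolutional features intact.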

3. Overfitting Risk

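Without sufficient data augmentation or regularization, the large number of parameters can lead to overfitting. The original training setup countered this with weight decay, dropout (ratio 0.5) on the first two fully connected layers, and augmentation by random cropping and horizontal flipping.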
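Dropout randomly silences units during training so the network cannot rely on any single feature. The sketch below uses the "inverted" formulation common in modern frameworks (surviving units are scaled by 1/(1 - p) during training, so nothing changes at inference); the original paper does not prescribe this particular implementation, and the `dropout` function is a hypothetical helper.

```python
import random


def dropout(activations, p=0.5, rng=None, training=True):
    """Inverted dropout: zero each unit with probability p, scale survivors by 1/(1 - p).

    Assumes 0 <= p < 1. At inference (training=False) inputs pass through unchanged,
    because the training-time scaling already preserves the expected activation.
    """
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
out = dropout([1.0] * 8, p=0.5, rng=rng)
print(out)  # each entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
```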

How VGG Revolutionized Neural Networks

Simplification of Architectural Design

Before VGG, CNN architectures often used varying filter sizes and complex designs. VGG demonstrated that simplicity—using small filters and consistent design patterns—could yield excellent results, inspiring future models like ResNet and DenseNet.

Depth as a Game-Changer

VGG showed that adding layers, on its own, could steadily improve accuracy, which made depth a central axis of CNN design. This encouraged researchers to build even deeper networks, and the difficulty of training them led to innovations like ResNet’s residual connections.

Establishing Benchmarks

The high performance of VGG in benchmarks like ImageNet set a new standard, providing a baseline for evaluating new architectures.

Applications of VGG

1. Image Classification

Image classification, the original purpose of VGG, remains its primary application, and ImageNet-trained VGG models perform well on many datasets beyond ImageNet.

2. Object Detection

VGG-16 serves as the backbone of several object detectors, including region-based models such as Fast R-CNN and Faster R-CNN, as well as SSD.

3. Feature Extraction

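Because its intermediate feature maps capture both low-level textures and higher-level structure, VGG is widely used as a feature extractor, for example in neural style transfer and in segmentation networks such as FCN.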

VGG’s Legacy

While modern architectures like ResNet and EfficientNet have surpassed VGG in efficiency and performance, its impact remains undeniable. VGG taught the deep learning community the importance of depth, simplicity, and modularity.

Moreover, its role in transfer learning continues to make it relevant, especially for researchers and practitioners looking for pre-trained models.

Conclusion

The VGG architecture represents a turning point in the history of deep learning. By emphasizing depth and simplicity, it not only achieved groundbreaking performance but also influenced the design principles of subsequent neural networks. Despite its challenges, VGG’s contributions have left an indelible mark on the field, proving that elegance in design can lead to revolutionary advancements.

For anyone looking to understand the evolution of neural networks, VGG is an essential study—a model that paved the way for deeper, more powerful architectures.